Replication via Cloud File Storage can be used to send jobs to a destination DD that has a dynamic address or is not online at all times. It can also be used when the source DD host cannot be reached directly on a TCP/S port and only HTTP/S is allowed to be open in the HO environment. It also allows a backup source DD to handle incoming requests from destination DDs, because both source DD services can read the files waiting in Cloud Storage and generate the download URL for the destination. To set this up, add the DD host names to the Aliases field.

When replicating in Azure mode, the DD at the HO (source) host does not try to send the job files to the destination DD hosts. Instead, the job files are uploaded to Cloud File Storage, where they wait with status None and with information that the host is Cloud Storage and files are waiting. The destination DD (the offline host) sends a pull request to the source DD Web Service at HO, asking for any job files waiting for it, and downloads the files directly from Cloud Storage using the download URL reference. This way, the source DD does not need to know the IP address of the destination DD; only the destination DD needs to connect to the source, using the DD Web Service URL.
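The pull flow described above can be modeled with a small in-memory simulation. This is only an illustrative sketch: the class and function names (`CloudStorage`, `SourceDD`, `pull`) and the status texts are hypothetical and are not part of DD, and removing a file once it is downloaded is a simplification made for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class CloudStorage:
    """Hypothetical stand-in for Cloud File Storage: host name -> {file name: content}."""
    files: dict = field(default_factory=dict)

    def upload(self, host, name, content):
        self.files.setdefault(host, {})[name] = content

    def waiting(self, host):
        return sorted(self.files.get(host, {}))

    def download(self, host, name):
        # Sketch simplification: a downloaded file is removed from storage.
        return self.files[host].pop(name)


@dataclass
class SourceDD:
    """Hypothetical stand-in for the source DD and its web service at HO."""
    storage: CloudStorage
    status: dict = field(default_factory=dict)

    def send_job(self, host, name, content):
        # The source uploads the file and waits; it never connects to the destination.
        self.storage.upload(host, name, content)
        self.status[name] = "waiting in Cloud Storage"

    def pull_request(self, host):
        # Answer a destination's pull request with the names of its waiting files.
        return self.storage.waiting(host)

    def report_status(self, name, status):
        # The source only learns about progress when the destination reports it.
        self.status[name] = status


def pull(source, storage, host):
    """One pull cycle, run by the destination DD."""
    received = {}
    for name in source.pull_request(host):
        received[name] = storage.download(host, name)
        source.report_status(name, "received by " + host)
    return received
```

Every connection in this model is opened by the destination side (`pull`), which is why the source never needs the destination's address.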

The Job Monitor at the source host gets status updates from the offline hosts, because the destination DD sends a status update back to the source DD via the DD Web Service.

Note: When sending jobs to offline locations, the Job Monitor at the source is not able to send any Re-Check Status requests. The only status updates it gets are those that the DD at the offline location sends back, because requests from the source cannot reach the offline location.

Configuration for Source host

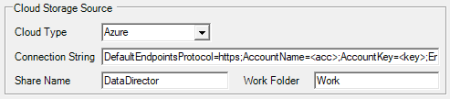

In the Configuration Tool, on the Data Config tab, choose the type of Cloud File Storage in the Cloud Type list.

Azure

- Put the Azure Storage connection string, including Account Key, in the Connection String field.

- Set Share Name to the Azure share name in the storage container.

- To keep files in a separate folder, create a folder in the Share root and enter the folder name in the Work Folder field. DD creates a folder for each host that is expecting data, named after the host, either in this folder or in the Share root if no folder name is specified.
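The folder layout described in the last item can be illustrated with a short helper. This is a sketch of the naming rule as stated above, not DD code; the function name `host_folder` and the sample folder name `DDJobs` are hypothetical.

```python
def host_folder(host_name, work_folder=""):
    """Return the per-host folder path, relative to the Share root.

    With a Work Folder the host folder sits inside it; without one,
    the host folder sits directly in the Share root.
    """
    parts = [p for p in (work_folder.strip("/"), host_name) if p]
    return "/".join(parts)
```

For example, `host_folder("STORE3", "DDJobs")` gives `DDJobs/STORE3`, while `host_folder("STORE3")` gives `STORE3`.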

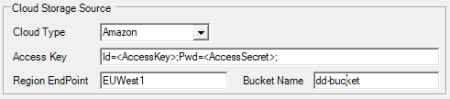

Amazon

- Enter the Amazon AWS S3 Storage access key and secret in the Access Key field, in the form `Id=<key>;Pwd=<secret>;`

- Set the region endpoint name in Region Endpoint.

- Enter the AWS bucket to use in the Bucket Name field. DD separates the files for each host that is expecting data by adding a host prefix to the AWS file key.
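The Access Key value is a short string of `Name=value;` pairs. As a sketch, the helpers below build and read back such a string. The function names are hypothetical, and the credentials in the usage note are the placeholder values used in the AWS documentation, not real keys.

```python
def access_key_value(key_id, secret):
    """Build the Access Key field value in the form Id=<key>;Pwd=<secret>;"""
    return f"Id={key_id};Pwd={secret};"


def parse_access_key_value(value):
    """Split an Access Key field value back into (key id, secret)."""
    # Split each pair on the first "=" only, so the secret is kept whole.
    pairs = dict(item.split("=", 1) for item in value.split(";") if item)
    return pairs["Id"], pairs["Pwd"]
```

For example, `access_key_value("AKIAIOSFODNN7EXAMPLE", "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY")` returns `Id=AKIAIOSFODNN7EXAMPLE;Pwd=wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY;`.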

List of available AWS regions:

| | | | | | | |
|---|---|---|---|---|---|---|
| AFSouth1 | APEast1 | APNortheast1 | APNortheast2 | APNortheast3 | APSouth1 | APSouth2 |
| APSoutheast1 | APSoutheast2 | APSoutheast3 | APSoutheast4 | CACentral1 | CNNorth1 | CNNorthWest1 |
| EUCentral1 | EUCentral2 | EUNorth1 | EUSouth1 | EUSouth2 | EUWest1 | EUWest2 |
| EUWest3 | ILCentral1 | MECentral1 | MESouth1 | SAEast1 | USEast1 | USEast2 |
| USGovCloudEast1 | USGovCloudWest1 | USIsoEast1 | USIsoWest1 | USIsobEast1 | USWest1 | USWest2 |

Configuration for Destination host

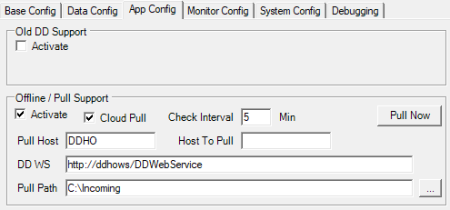

- In the Configuration Tool, on the App Config tab, activate Offline / Pull Support and Cloud Pull on all destination hosts that will be pulling data from Azure.

- Set DD WS to the URL of the source DD Web Service running at HO.

- If the DD Web Service (IIS) is not running on the same host as the DD Service at the HO, set Pull Host to the name of the host where the DD Service is running. Check Interval sets how often DD connects to the source to check for new jobs.

- If the Distribution Server name for the destination host does not fully match the actual host name, the destination host name can be put in Host to Pull. This will be used to look up jobs in Cloud Storage. This should match the Host name set in the Distribution Location card for the destination.

- Set Pull Path to the folder that files are downloaded to. The Manual Import Service picks the files up from there and imports them into the DD queue.

- Click the Pull Now button to make DD send a pull request right away, overriding the Interval timer. This starts the DDPullMon program, which triggers a check for new jobs and also shows the status of the incoming jobs. This program can also be run directly from the bin folder where DD is installed, for example to trigger a request from the POS.

- In the Distribution Location setup, for all destination hosts that will be offline, change the Data Director Mode to AZURE, and enter the host name used when pulling in the Distribution Server Name field. The format for the host path is the host name, the default port number, and the mode suffix `:AZURE`, for example `STORE3:16860:AZURE`.
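The host path format from the last item can be sketched as a pair of helpers that build and split the string. The names `HostPath`, `format_host_path` and `parse_host_path` are hypothetical and used for illustration only; the port 16860 is the one from the example above.

```python
from typing import NamedTuple


class HostPath(NamedTuple):
    host: str
    port: int
    mode: str


DEFAULT_PORT = 16860  # port used in the STORE3 example above


def format_host_path(host, port=DEFAULT_PORT, mode="AZURE"):
    """Build a host path such as STORE3:16860:AZURE."""
    return f"{host}:{port}:{mode}"


def parse_host_path(text):
    """Split a host path into host name, port and mode."""
    # Surrounding brackets, as in [STORE3:16860:AZURE], are ignored.
    host, port, mode = text.strip("[]").split(":")
    return HostPath(host, int(port), mode)
```

The host name part should be the same name that the destination uses when pulling, so that the jobs waiting in Cloud Storage can be found.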

Sending jobs from offline host to source (HO)

If you plan to run the Scheduler at the offline destination host and send jobs to the source host (Head Office), or to push transactions to HO with DD Push, HO will not be able to send a status reply back to the offline host, because the IP address is unknown and the TCP port may be blocked.

We recommend sending transactions from POS to HO after every sale, using the LS Central Web Service Push mode in the Functionality Profile.