In this article

Like in all systems, issues can occur in Analytics for LS Central. This article explains how to troubleshoot various issues that can occur during setup and operation of Analytics, and there is a special section on how to troubleshoot missing data issues.

Setup

Sometimes Analytics deployment can result in errors, usually because some steps were skipped in the onboarding process. Usually, when this happens, errors are displayed in the PowerShell client. Here are the most common errors and the solutions to them.

File not trusted

In some cases, PowerShell will not trust the files from the ZIP, and will produce an error similar to the following:

Run only scripts that you trust. While scripts from the internet can be useful, this script can potentially harm your computer. If you trust this script, use the Unblock-File cmdlet to allow the script to run without this warning message. Do you want to run ... [D] Do not run [R] Run once [S] Suspend [?] Help (default is "D"):

To fix the error, you need to run the following command in the base folder of the Analytics product package:

dir -Path . -Recurse | Unblock-File

Azure module too old

The Analytics PowerShell deployment modules need to have the correct Az powershell module setup. If your Az module is too old, you will get the following error when the deployment script is creating the SQL database in Azure:

Creating a new Azure SQL database. This might take a few minutes... WARNING: Something went wrong :( Cannot bind argument to parameter 'StatusMessage' because it is an empty string.

You need to run PowerShell/VS code "as administrator" to be able to check and update the module. To check what Az module is installed, run the following in PowerShell:

Get-InstalledModule Az

This should list all modules that are installed and the Az module should be on top. The version there should be 5.7. If it is not, you can run the following command to update the Az module:

Install-Module -Name Az -RequiredVersion 5.7.0

You can then run the Get-InstalledModule Az again to check if the module has been updated to 5.7, and if it has, you are ready to re-run the deployment script.

Connecting to new subscription

Sometimes, if you have connected to a different subscription with a different user on the machine you are using to run the deployment script, you can run into errors when connecting to Azure. This is usually related to a subscription being set as the default subscription, and then the new user you are trying to use does not have access to that subscription. To fix this you can connect to Az-Account and set the new subscription ID as a parameter on the connection.

Connect-AzAccount -Tenant 'Tenant/ParentManagementGroupIDfromAzure' -Subscription 'SubscriptionIDfromAzure'

One of our consultants also had an error where he was unable to log into his Azure account when prompted and always got an error. This was solved by following some instructions online regarding the JWS login error and then logging out of the account in Edge and clearing the cache.

Wrong password provided for admin user on existing SQL server in Azure

If you decide to deploy the Analytics Data Warehouse database to an existing SQL server in Azure, you need to enter a user name and password for that server. If you provide the wrong credentials and the script fails to restore the Analytics DW to the server, you will get the following error when running the deployment script:

Creating a new Azure SQL database. This might take a few minutes... WARNING: Something went wrong :( Response status code does not indicate success: 400 (Bad request).

To double-check what is happening, you can go into the SQL server in Azure portal and check the Import/Export history. There you should be able to find the database you were importing, and if you click the database name link you should see the reason why the import failed.

To recover from this you need to run the deployment script again, and make sure you have the correct user name and password for the SQL server. If you run the deployment script again without closing VS Code, it will retain all the parameters except passwords, so if you entered the wrong user name, you must make sure you select not to reuse the one you entered before. You will be prompted for reuse of each parameter by the script.

Azure not responding

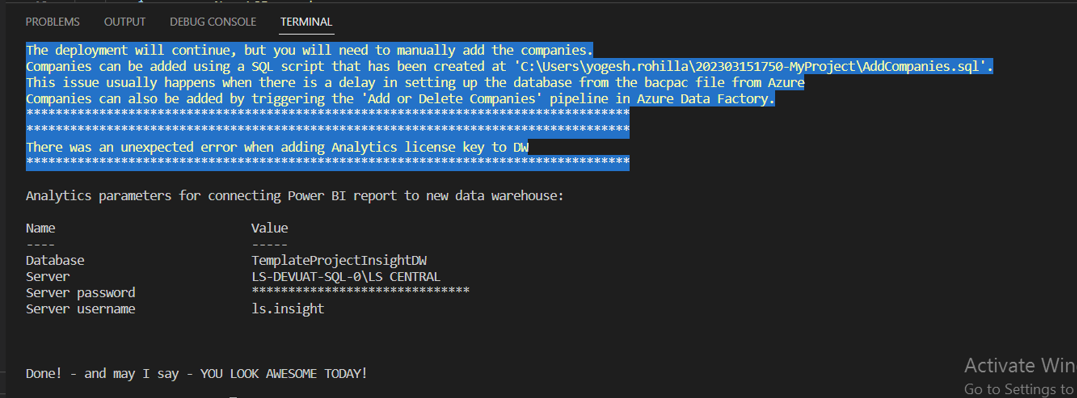

Occasionally, Azure takes a really long time to create the SQL database. The deployment script has an inbuilt timeout to prevent the deployment taking too long. If that occurs, you should see output similar to the one below during the deployment:

If this happens, you will need to wait for the database deployment to finish in Azure. You should be able to see the progress in the Azure Portal > SQL server > Import/Export section. After the import is done, you can run the script which was created by the deployment by following these steps before continuing with the deployment:

- In Azure Portal, open the SQL server from resources.

- From left menu, select Settings - Import/Export history.

- Click the line for the database, and it will show the status and start time.

- The import should finish within a few hours. If it fails, you can just delete all the resources created and run the deployment script again.

Once the database import has completed, you need to manually add the companies before triggering the Initial load in the Azure Data Factory. An SQL script file named AddCompanies.sql has been created in the My-Project folder in the Base folder, that can be used on the Analytics database to add the companies. The script executes the stored procedure [dbo].[LSInsight$InsertCompany] with the name of each company.

You do not need to worry about the license key not being added, Analytics will work regardless, and the key will be added when the Analytics environment is next updated.

Operation - pipeline errors

There can be several reasons why a pipeline fails to run. If you hover the mouse over the talk bubble icon in the error column of the pipeline monitor page, the error is displayed. Here are the most common ones and solutions to them.

Connection to LS Central source database fails

Operation on target LookupCurrentWaterMark failed: ErrorCode=SqlFailedToConnect,

'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=Cannot connect to SQL Database:

'LSR000054',Database: 'mydatabase2', User: 'skynet'. Check the linked service configuration is correct,

and make sure the SQL Database firewall allows the integration runtime to access.,Source=Microsoft.DataTransfer.

ClientLibrary,''Type=System.Data.SqlClient.SqlException,Message=Cannot open database "mydatabase2" requested by

the login. The login failed. Login failed for user 'skynet'.,Source=.Net SqlClient Data Provider,

SqlErrorNumber=4060,Class=11,ErrorCode=-2146232060,State=1,Errors=[{Class=11,Number=4060,State=1,Message=Cannot

open database "mydatabase2" requested by the login. The login failed.,},

{Class=14,Number=18456,State=1,Message=Login failed for user 'skynet'.,},],'

When this error occurs, something is wrong with the LS Central source information in the ADF. You must check the following parameters:

- $SourceServerName

- $SourceDatabaseName

- $SourceUserName

- $SourcePassword

Make sure that you have the correct server and database names and that you are using SQL user, and not Windows user, user credentials. Analytics does not allow the use of Windows user credentials, so you need to create or use an SQL user that has read permission to the source database.

To make sure that your server, database, and user credentials are working, you can connect through SQL Server Management Studio on the machine where you are going to set up the integration runtime gateway, and verify that you can connect to and run a SQL query on the database or do test connection from the LSCentralSource linked service in the ADF.

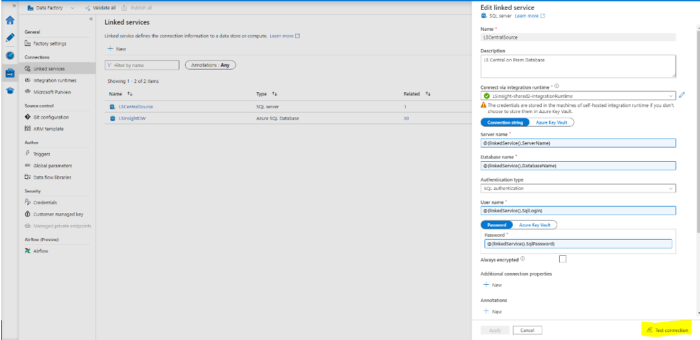

Azure Data Factory fails at the start

If the ADF pipeline fails at the first step with a timeout error, there is probably some issue with the linked service connection. It is best to test both linked service connections in the Azure Data Factory studio in the Azure portal.

If either connection fails, please reiterate through the steps in the onboarding help in order to get the linked service and integration runtime working. The customer might also need to check firewall settings on their side to see if a firewall is blocking the connection to the LS Central server.

Integration runtime gateway turned off

Operation on target LookupCurrentWaterMark failed: The Self-hosted Integration Runtime 'LSInsight-integrationRuntime' is offline,last connect time is '06/03/2020 16:43:56.832'.

When this error occurs in a pipeline run the machine, where the integration runtime gateway is running, has been turned off. It is very important that the machine, where the gateway is set up, is up and running when the pipelines are scheduled to run. This machine also needs to have access to the source database using the credentials provided in the parameter file.

Populate dCompany failed

The following error message or something similar is fairly common:

Operation on target Populate dCompany failed: Execution fail against sql server. Please contact SQL Server team if you need further support. Sql error number: 208. Error Message: Invalid object name 'stg$Accounting Period'.

This is usually because the name of the company (or companies) is missing from the LSInsight$Companies table. If there was an error during the deployment of Analytics, the deployment script should have created an SQL script to insert the companies selected during the deployment. Otherwise, it is also possible to add the companies with the “Add or Delete Companies” pipeline in ADF.

Replication - LS Central SaaS specific errors

The SaaS replication process can be fragile and errors sometimes occur during replication. Even if a replication job is started from LS Central, it can fail during run in the Data Director. It is always a good idea when setting up the replication of the Analytics jobs to open the Configuration tool and the job monitor and monitor the first few runs of the jobs. Below are some errors that can occur when replicating and solutions to them.

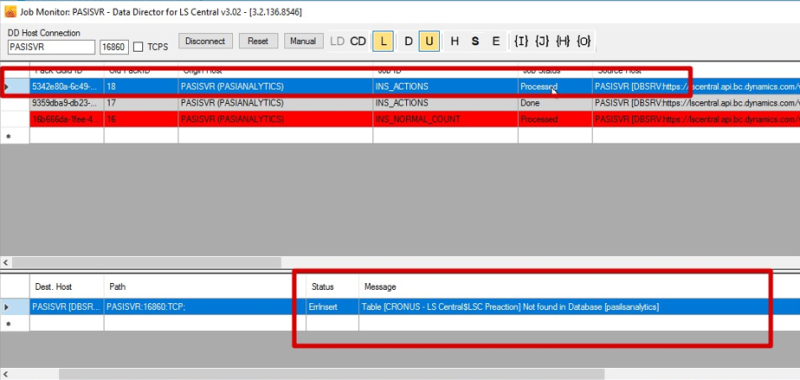

LSC Preaction table not found in database

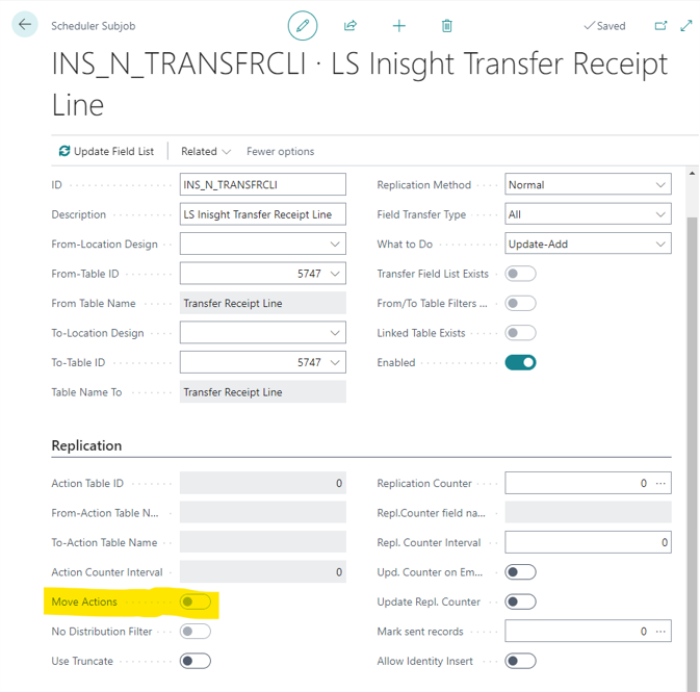

This error is known to occur in the replication jobs, usually in the INS_ACTIONS or the INS_NORMAL_COUNT jobs. This error is because one (or more) of the subjobs has the “Move actions” flag enabled.

To fix this issue:

- You must open LS Central on the scheduler server, open the Scheduler Subjob List page, and filter on job ID INS_A (that should show all INS_ACTIONS subjobs).

- Open all the subjobs, and check if the Move Actions flag is enabled. Do the same for all jobs with ID starting with INS_NWC (should show all subjobs for INS_NORMAL_COUNT).

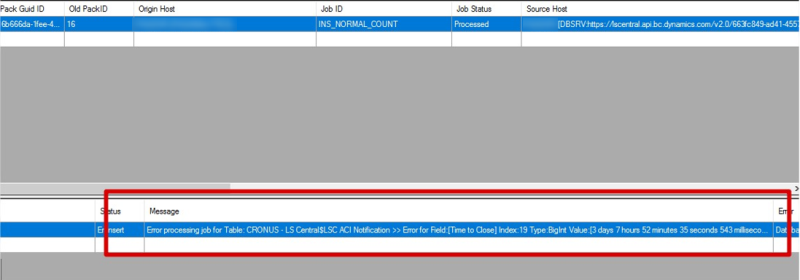

Error for Field [Time to Close]

This error is caused by a bug in LS Central. This bug is fixed in LS Central version 22, so if customers receive this error, they probably need to update their scheduler server environment.

This error can occur on any field of type Duration.

There was also an issue with empty duration fields that was fixed with version 3.02.138 of Data Director.

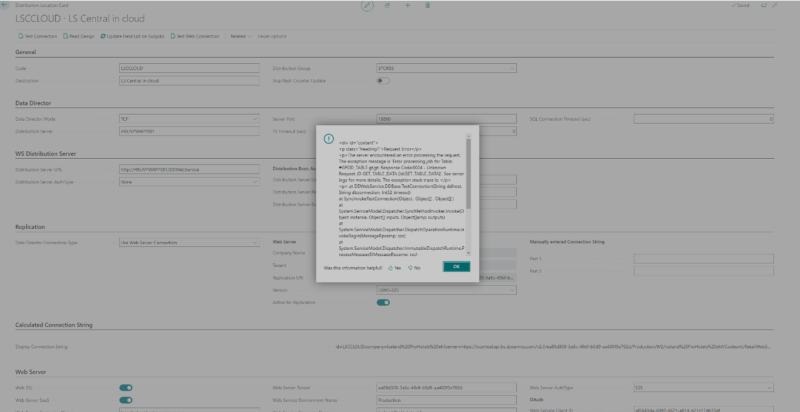

Error when v1 web request is missing

This error is caused by missing web service requests in the SaaS environment. The online help explains how to publish these requests and those steps need to be revisited if this error appears when running the jobs.

You can check what web requests are available in SaaS by going to LS Central:

- Navigate to Web Service Setup, and select web Request 1.0.

If everything is normal this list should be populated with several web requests and GET_TABLE_DATA and GET_TABLE_DATA_BY_PRIMARY_KEY should be among them.

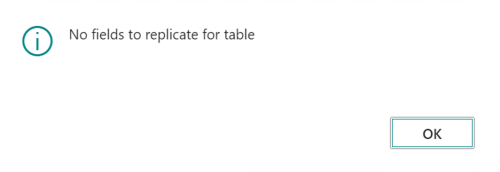

Version mismatch causes missing fields

If the SaaS and on premises LS Central environments do not have the exact same version, it can cause an error in Data Director when replicating.

If this happens, you need to check ‘ignore extra fields’ in the DD configuration tool and the job will run.

In the example, the field ‘Allow multiple posting groups’ existed in the on-premises database but not in SaaS.

Missing data

Sometimes when you think you have completed the setup of Analytics for LS Central, the reports are still only showing partial or no data. There can be multiple reasons for missing data, and therefore the troubleshooting process is explained here, step-by-step.

Reset Data warehouse

The factory reset pipeline in the Azure Data Factory is very useful when there are some problems with the data. As the name suggests, it recreates the whole data warehouse by dropping all staging tables and truncating dimension- and fact-tables, it updates the query base, and finally re-fetches all data from LS Central and populates the dimension- and fact-tables again. It is vital that there is no other pipeline running while the Factory reset pipeline is ongoing.

The Factory reset pipeline is very useful in the following scenarios:

- There are duplicate rows in the staging tables that cause errors in fact table procedures.

This is most likely due to the fact that two pipelines were running at the same time. - Data has been imported into the LS Central environment with old timestamps.

This will cause gaps in fact tables since they only process the staging tables based on the newest timestamp in the previous run. - After extending Analytics, it is always best to run a Factory reset.

The factory reset will not delete from pre-staging tables, but reset the query base, staging tables and dimension and fact tables.

Update Analytics

All releases of Analytics contain an update package for the previous version. This is to enable customers to easily update their current Analytics environment without having to set up everything from scratch.

Note: The update package is only compatible with the previous version, it will not work with older versions.

It is best if you have access to the parameter file created during the initial deployment of Analytics but it is not necessary. The update process is very similar to the deployment, the same sets of parameters are needed to complete the update. The difference is that the update package will update the existing database and ADF instead of recreating them.

The database update package contains a set of scripts. The scripts contain all new database objects along with changes to the existing objects, if needed. This includes tables and stored procedures as well as updates to Analytics metadata tables.

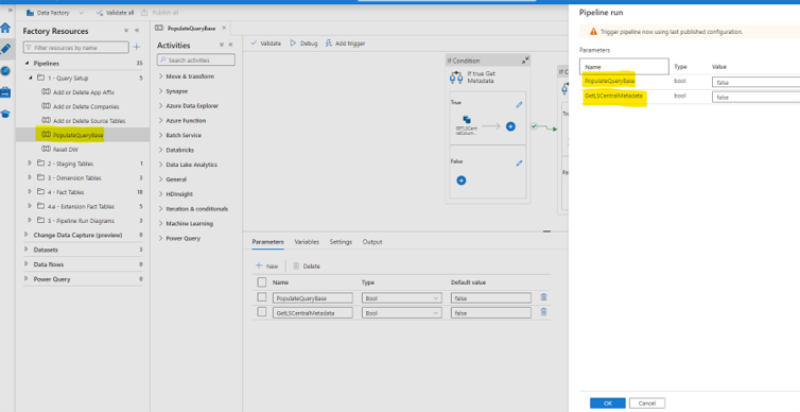

If there are new staging tables added to the Analytics database, you will need to run the Populate Querybase pipeline in ADF in order to create the table and the SQL commands needed to copy and transform the data from LS Central to Analytics. The Populate Querybase pipeline has two input parameters, PopulateQueryBase and GetLSCentralMetadata. Set the PopulateQueryBase parameter to TRUE, the GetLSCentralMetadata should only be set to TRUE if there are new tables added to LS Central that need to be added as a staging table in the Analytics database.

Once PopulateQueryBase pipeline has finished running, you should trigger the Scheduled run pipeline to refresh the data in Analytics.