In this article

The Analytics for LS Central setup process creates the Azure Data Factory (ADF). The ADF contains everything needed to populate the star schema dimensions and facts in the Data Warehouse. This article describes all the different factory resources and how they are used.

Note: This is a detailed description of the ADF components to give you a better understanding of what happens where. Everything about how to use the ADF when setting up Analytics is explained in the onboarding process.

This page refers to the ADF as it is in Analytics version 2025.1 and later. If you have an older version of Analytics you can refer to the old ADF description.

Overview

The components are:

- Integration runtime gateway

- Linked services

- Pipelines

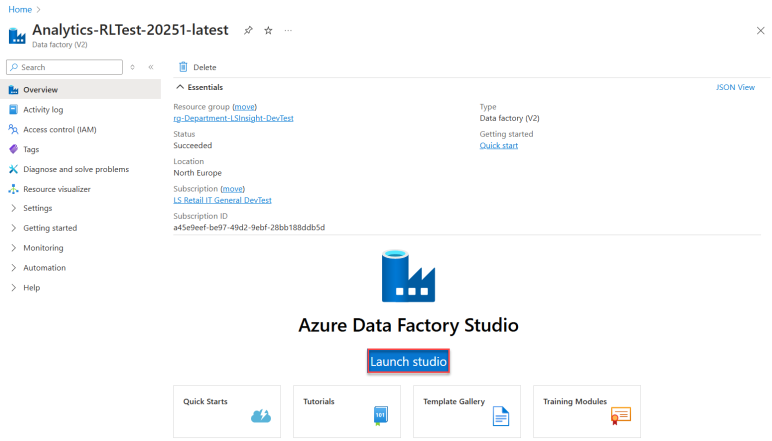

To view these components in the Azure portal you need to open the ADF from the resource list and then select Launch studio from the overview page.

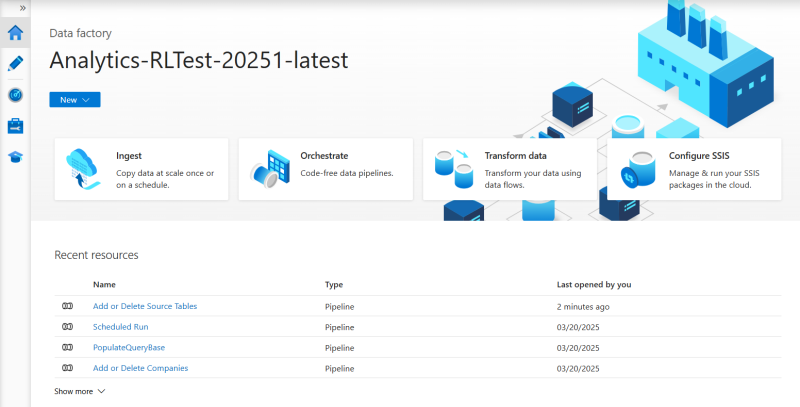

When you launch the Azure data factory studio view, you see a new tab in your browser and get access to the data factory menu that is referenced many times in this ADF description. The menu consists of icons and labels that lead to different sections.

Data Factory - Home

This is a generic section where you can access videos and knowledge about how to get started with ADF. Since the ADF is created by the deployment script, you can just use this information if you want to explore the options of ADFs further.

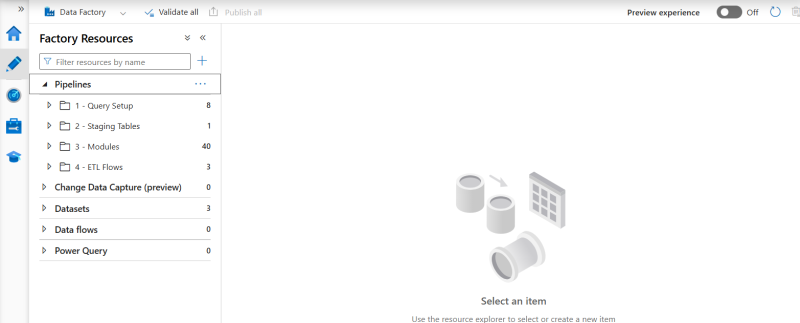

Author

The Author section is the heart of the ADF. In this section you have access to the pipelines that are an essential part of the Analytics ETL processes.

Pipelines

The Analytics pipelines are arranged in a folder structure by where in the process they are used. Some pipelines query the LS Central source database, while others move data between tables in the Data Warehouse by running stored procedures from the DW database. Other pipelines are created to control the order the pipelines are run in. Their only purpose is to execute other pipelines.

This folder contains six pipelines.

Add or Delete App Affix

When this pipeline is triggered, you can add or delete an App Affix from the Analytics$PublisherAffixReg table. The AppID, AppName, and Publisher parameters are required but you only have to provide either a Prefix or Suffix. If you set the deleteApp parameter to TRUE, you only have to provide the AppID to delete the App registration from the table. For LS Central versions that have extension GUIDs you must register all extensions you want to use in Analytics in this table. If a registration does not exist, no data will be loaded from the extension table to Analytics.

Add or Delete Companies

When this pipeline is triggered, you can add a company name to be added to or deleted from the Companies table. The DeleteCompanies parameter decides whether the company is added or deleted. You can add more than one company at a time; you just need to separate the names by a comma in the Companies field.

Example:

This pipeline is not used, unless you want to trigger it manually to add a new company to your current Analytics instance. If you do that you need to trigger the PopulateQueryBase pipeline as well to build the queries for the new company.

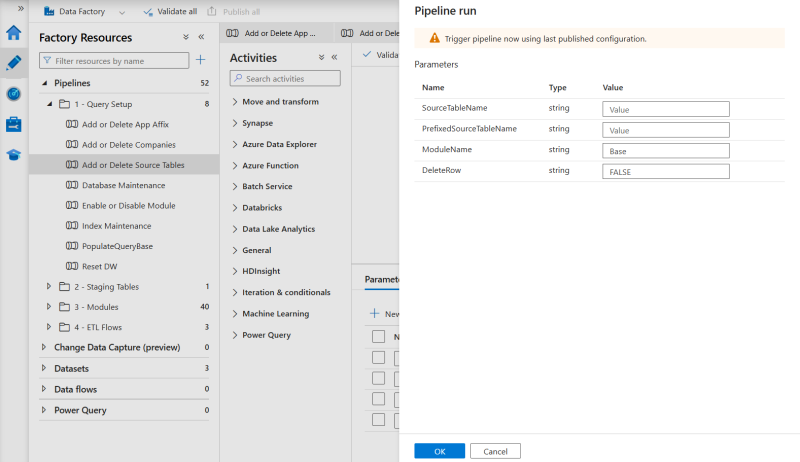

Add or Delete Source Tables

When the extension table affixes were introduced in version 17.5, some of the extension table names had to be shortened. Instead of changing all our stored procedures and having different procedures between versions, a map was created from the old table names to the new ones. This means that the new short or prefixed names can be changed back to the older or more simple names to use in our data warehouse staging tables.

When this pipeline is triggered, you can add a source table name to be added to or deleted from the Analytics$SourceTablesMap table. You need to provide a short table name that will be used as the staging table name, usually this is the name of table in older versions of LS Central. If you add a table that is added by LS Central or another extension, you need to add the actual table name with the extension prefix as well. You then need to add the name of the Module that the source table belongs to. You can find the modules names that are a part of the standard Analytics in the Enable or Disable module section below or you can create your own if you are creating a custom module. The DeleteRow parameter decides whether the Source table name is added or deleted.

Database Maintenance

The Database maintenance pipeline runs a stored procedure called AzureSQLMaintenance that has been created based on a script from the MS community that updates statistics and does index maintenance on the Analytics Azure SQL database.

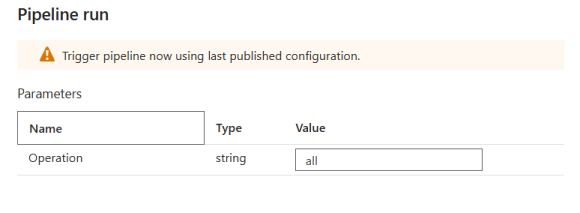

It has one Operation parameter that controls what components are run. The default is all but you other values you can use are index or statistics if you only want to do one or the other.

Enable or Disable Module

This pipeline can be run to enable and disable Analytics modules.

There are five modules and they roughly correspond to the Analytics report templates.

- Base - All dimension data for Sales and Inventory and Supply chain reports + fact tables for Sales and ACI reports

- Inventory - No additional dimensions - fact tables for Inventory and Supply chain reports

- Hospitality - Additional dimensions and fact tables used in Hospitality Analytics report

- Bookings - Additional dimensions and fact tables used in Booking Analytics report

- Hotels - Additional dimensions and fact tables used in Hotel Analytics report

When you setup a new Analytics environment all modules are enabled by default so the best course of action is to disable the modules you do not want to use before the initial load is run. But the pipeline can also be used to disable or enable modules after the environment has been running for some time.

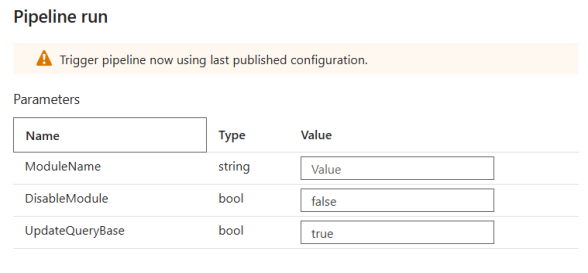

When you run the pipeline you need select the module name and whether you want to disable or not.

The third parameter of the pipeline is whether or not to update the query base. If you are enabling a module you need to set this as true to start staging data. If the queries for the staging of the source tables belonging to this module do not exist no data will be staged by the next Scheduled run. And the same if you are disabling a module and want to stop staging data from the module source tables then you need to set this parameter as true. If you do not the query base will not be updated and data will be staged even if the module has been disabled and no more data will be loaded to fact or dimension tables belonging to this module.

Disabling a module will not delete any data from staging or data warehouse fact and dimension tables. If you want to remove data you need to disable the module and then run Factory reset. The Factory reset pipeline respects which modules are enabled and disabled.

Index Maintenance

The Index maintenance pipeline runs a stored procedure called usp_AdaptiveIndexDefrag that has been created based on a script from the MS community that does index maintenance on the Analytics Azure SQL database. This pipeline has no parameters.

PopulateQueryBase

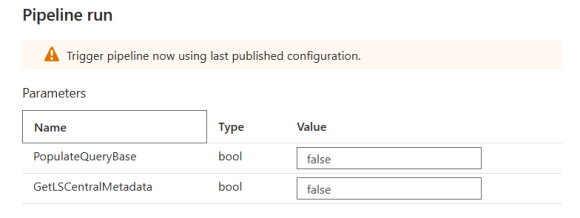

This pipeline has two parts that are by default run one after the other, but can be run separately, if needed.

The first part is Get Metadata. Here a query is sent to theLS Central source database to collect information about all tables and fields.

This meta data is then used in the second stage Populate Query Base to create the staging queries for all tables listed in the Analytics$SourceTable table and adding them to the Analytics$QUERYBASE table.

After the pipeline has run, the Analytics$QUERYBASE has been populated with creation scripts for the staging tables, where the Business Central (BC) base table has been combined with all extension tables with the same name, into one staging table.

The query base also contains select queries used by the All Staging Tables pipeline to populate the staging tables.

This pipeline is run once during the onboarding process and does not need to be run again, unless LS Central is updated with new extensions that should be added to Analytics, or if you want to add a new staging table to extend Analytics beyond the standard version provided by LS Retail.

The Extending Analytics section of the Analytics online help explains in further detail how to extend your instance, since the extension steps differ depending on which LS Central platform you are running on.

Reset Analytics DW

This pipeline runs a stored procedure on the database that deletes all retail data from the database, without deleting any of the necessary meta data that is included when the database is created from the template. This pipeline should always be run as a part of the Factory reset pipeline.

This folder only contains one pipeline since the staging process is dynamic and controlled by the data in the Analytics$SourceTablesMap table.

All Staging Tables

This pipeline is run as part of the Scheduled Run pipeline that should be scheduled to run every day.

It starts by getting the first company from the Companies table, and then looping through all tables in Analytics$SourceTablesMap that have include = true, then creates the staging tables and populating them with data from the LS Central source.

The same thing is then done for all additional companies, LS Central is queried and the staging tables are populated with information from each company.

The RowID of the last staging table is then stored in the AnalyticsAudit table to ensure incremental load when applicable.

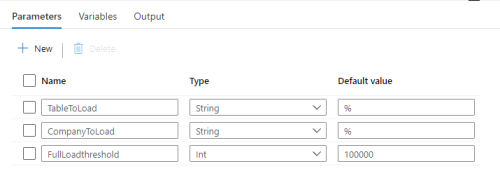

This pipeline has a few parameters that can be used for testing. The default values are shown in the image:

-

TableToLoad - Here you can add the name of one or more tables that should be loaded. Here you do not provide the table names separated by comma, but instead supply the string used for a "like" comparison on the table name. So if you were to add something like '%Item%' to the parameter field, the pipeline would run for all tables that contained the word 'Item'.

-

CompanyToLoad - This parameters works exactly the same as the TableToLoad, except that it filters companies, not tables.

-

FullLoadthreshold - The full load threshold determines how many rows a table can have before doing an incremental load instead of a full load of the table from source to staging. This is because the incremental load always has some cost, so it is not worth doing an incremental load for tables with relatively few rows. You only want to do incremental load on tables with many rows and where many rows are being added each day, like the transaction and entry tables.

This folder contains all the pipelines used to load data into the data warehouse dimension and fact tables. It contains the module wrapper pipelines and sub folders for the dimension table module pipelines, extension fact table pipelines and the standard fact table pipelines. None of these pipelines should be run individually and the folders are just used for better organization of the ADF.

For each module there exists a wrapper pipeline that is included in the Scheduled run pipeline. The Base module pipeline is always run and contains the fact table pipelines that are a part of the Base module.

Bookings, Hospitality and Inventory module pipelines run as a part of Scheduled run pipeline if the modules are enabled and Hotels module pipeline is only run if the hotel extension is installed and the module is enabled.

For these for pipelines the wrapper pipeline runs both dimension and fact tables that belong to that module and are not used by other modules. Shared dimensions are always run as a part of the Base module.

The Dimension folder contains several pipelines. These pipeline are run as part of the Module wrapper pipelines and Scheduled run pipeline (Base module).

Base Dimension

This pipeline starts by populating the dCompany dimension table. It then runs through all the stored procedures in the Data Warehouse database that start with dimMerged. Those stored procedures (SP) usually combine several staging tables to create the dimension table and add and update data as needed.

Base odSpecialGroup

This pipeline runs the stored procedure for the odMemberAttributes dimension. This is an out trigger dimensions and must be run after other dimension tables have been updated, because it combines data from staging tables and the dMember dimension.

Base odMemberAttributes

This pipeline runs the stored procedure for the odMemberAttributes dimension. This is an out trigger dimensions and must be run after other dimension tables have been updated, because it combines data from staging tables and the dMember dimension.

Booking Dimensions

This pipeline runs through all the stored procedures in the Data Warehouse database that start with dimBookingsMerged. Those stored procedures (SP) usually combine several staging tables to create the dimension table and add and update data as needed.

Hospitality Dimensions

This pipeline runs through all the stored procedures in the Data Warehouse database that start with dimHospitalityMerged. Those stored procedures (SP) usually combine several staging tables to create the dimension table and add and update data as needed.

Hotels Dimensions

This pipeline runs through all the stored procedures in the Data Warehouse database that start with dimHotelsMerged. Those stored procedures (SP) usually combine several staging tables to create the dimension table and add and update data as needed.

You can find all the stored procedures in the database under Programmability - Stored Procedures.

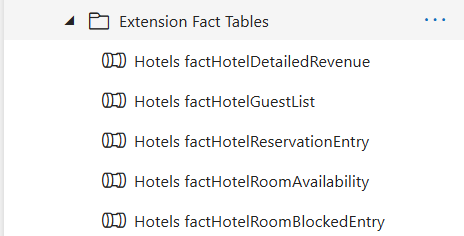

There are five extension fact table pipelines and both of them are for the LS Central Hotels extension. This is the only available extension for LS Central from LS Retail, but more are coming.

If the Hotel source tables are enabled in the Analytics$SourceTablesMap table and the extension and tables exist in the LS Central database, the data is automatically loaded into the data warehouse so no special setup is needed. The tables are enabled by default in standard Analytics but if they are disabled they can be enabled by enabling the Hotels module. All the tables and stored procedures for Hotels already exist in the data warehouse template.

In this folder the fact table pipelines are kept, that are part of standard LS Central. The pipeline are divided between the four remaining modules Base, Bookings, Hospitality and Inventory. These fact table pipelines are always run by the module wrapper pipelines that run as part of the Scheduled Run pipeline that should be scheduled to run every day. They should not be manually triggered.

This folder contains the pipeline that should be run to run all the other pipelines listed above. Most of pipelines listed above should never be run individually, but they were categorized into folders and explained here, so you are familiar with the processes and able to extend, if needed.

For information about how to extend the data warehouse and what pipelines to run after you extend, see the Extending Analytics section of the online help.

Factory reset

This pipeline first executes the Reset Analytics DW pipeline to clean all retail data from the data warehouse. Then it runs the Initial load pipeline to fully populate Analytics with data from LS Central.

If you are experiencing some data issues in the data warehouse, that might be caused by importing data to LS Central from an external system, or if you have at some point changed the LS Central source, it is good to run Factory reset to make sure that the issues you are experiencing are not caused by data mismatch between LS Central and Analytics.

Note:The Factory reset pipeline deletes all data from staging-, dimension- and fact tables and re-fetches all data from LS Central or pre-staging tables. Therefore, it is very important to plan this very well so that it does not have a negative effect on the LS Central system. When running the Factory reset pipeline, you must make sure that there is no other pipeline active and you must also disable the Scheduled run trigger to prevent the pipelines from overlapping each other. LS Retailalso recommends that users scale their Azure Analytics database to a higher service tier while running the Factory reset to complete it faster.

Initial load

This pipeline executes the Populate Query Base and Scheduled Run pipelines and it should only be manually triggered during the initial setup of Analytics.

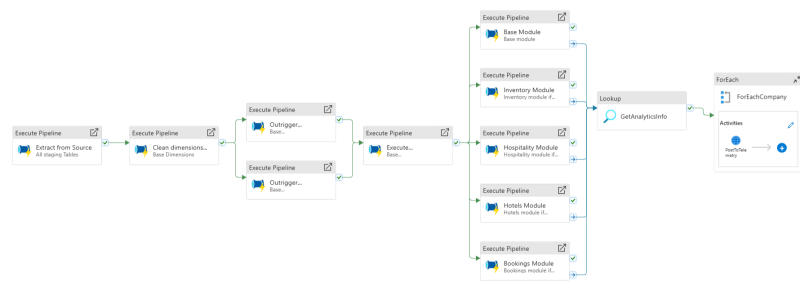

Scheduled Run

Like the name suggests this is the pipeline that should be scheduled to run once every 24 hours.

This pipeline executes all the other pipelines needed to load the data warehouse.

Datasets

There are three data sets in the Datasets folder and they are used in different lookup activities in the pipelines.

LSCentralColumnMetadata

AnalyticsDW

SourceDataset

Monitor

A special page is dedicated to Monitoring pipelines while they run. This goes both for scheduled pipelines and monitoring of manually triggered pipelines and pipeline chains.

Manage

The Manage section of the Azure Data Factory contains the Integration runtimes gateway and the Linked services. Both play a part in the connection from Azure to the LS Central source database and to the data warehouse.

Integration runtime gateway

The manual setup of the Analytics-IntegrationRuntime gateway is explained in the onboarding process. It is only needed when LS Central is on-premises, otherwise the AutoResolvedIntegrationRuntime is used.

Tip: Microsoft has some extensive documentation about the integration runtime.

Linked services

The onboarding process creates the ADF with two linked services.

LS CentralSource

This linked service stores the connection to the LS Central source database and utilizes the Analytics-integrationRuntime for that connection.

AnalyticsDW

This linked service stores the connection to the Analytics DW database.

If the database is located in Azure, it uses the AutoResolvedIntegrationRuntime to connect to the Azure SQL database, but if the DW is located on-premises within the same domain as the LS Central database, it can utilize the Analytics-IntegrationRuntime that was created during the Analytics setup.